As we discussed in our previous post about MetaHuman, the tools available to game developers today make it possible to create incredibly lifelike characters for our video game.

Since our goal is to make our game an entirely immersive experience, we have invested in state-of-the-art equipment for a process called motion capture. This equipment is crucial: it ensures that our characters not only look lifelike and natural but also move and react realistically, in both body language and facial expression.

What is motion capture?

Motion capture (MoCap) is the process of digitally recording face and body movements. The recorded data is then transferred to the characters in the game and drives how they move and act. We use this process to create various cinematic scenes in the game. We’ve all seen MoCap in AAA games such as Uncharted, Assassin’s Creed, and Far Cry, and it is used in film as well: Gollum in The Lord of the Rings is a famous example.

Traditional animation takes a lot of time and expert animators to reach a realistic level of quality. Motion capture saves us time, which is always a precious resource, and gives us the level of realism we want without having to hire professional animators. Of course, this requires the right equipment, so we’ve purchased two motion capture setups: two sensor suits from Xsens and two facial capture systems from Faceware.

Motion capture recording

We’ve set up a studio with a PC running the required software. The Xsens and Faceware software are synchronized, so we can record both face and body movements at the touch of a button. The actors suit up and act out the scene much as they would when filming a movie, except that only their motion and expressions are recorded.

The motion capture editing process

From there, we want the in-game animation to match the actor’s performance as closely as possible while also enhancing it. For the animators handling the MoCap recording, that means working with the performance: cleaning it up, fixing jitter and rotation issues, and then applying it to the digital character we’ve created.
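The jitter and rotation fixes are done with Maya’s own tools (the Graph Editor, for example, has an Euler filter for rotation flips), but the underlying idea is easy to sketch. The Python below is a hypothetical illustration, not our actual pipeline: `unwrap_degrees` removes artificial 360-degree jumps between consecutive frames of a single rotation channel, and `smooth` applies a centered moving average to damp jitter. Both function names and the default window size are assumptions made for this example.

```python
from typing import List


def unwrap_degrees(values: List[float]) -> List[float]:
    """Remove artificial 360-degree jumps ("flips") between consecutive frames."""
    if not values:
        return []
    out = [values[0]]
    for v in values[1:]:
        prev = out[-1]
        # Wrap the frame-to-frame change into [-180, 180) so the curve stays continuous.
        delta = (v - prev + 180.0) % 360.0 - 180.0
        out.append(prev + delta)
    return out


def smooth(values: List[float], window: int = 5) -> List[float]:
    """Centered moving average; the window is truncated at the start and end of the clip."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    n = len(values)
    result = []
    for i in range(n):
        lo = max(0, i - half)
        hi = min(n, i + half + 1)
        segment = values[lo:hi]
        result.append(sum(segment) / len(segment))
    return result


if __name__ == "__main__":
    # Hypothetical rotation channel (degrees per frame) with a flip at frame 2 and some jitter.
    raw = [170.0, 176.0, -178.0, -171.0, -176.0, -165.0]
    print(smooth(unwrap_degrees(raw), window=3))
```

A wider window removes more jitter but also softens fast, intentional motion, so this kind of filtering is best applied sparingly and checked by eye.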

In practice, the process looks like this:

- Export an .FBX file, which has the data for the body animation, and a .MOV file for the facial animation

- The facial video is tracked and exported to Unreal Engine as its own .FBX animation (this process is a story for another day!)

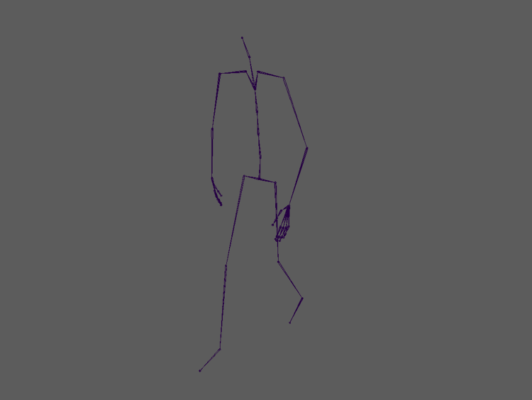

- The body .FBX file, containing a skeleton with animation, is then imported into Maya, Autodesk’s 3D computer animation, modeling, simulation, and rendering software. At this point the “skeleton” looks like this:

- We then import a previously created character rig into the same Maya project (see our previous post about how these characters are created)

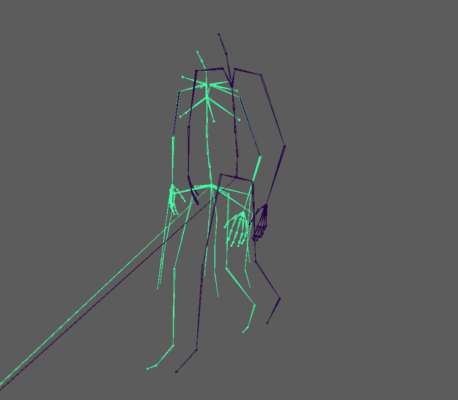

- There are now two skeletons in one scene: the one from the .FBX file and the character rig. They differ in both bone structure and naming conventions, so we have to match them up, which we do using the HumanIK tools in Maya.

- HumanIK maps the Xsens skeleton onto our custom skeleton through a standard bipedal bone hierarchy, so the custom skeleton follows the movements of the Xsens (MoCap) skeleton. The result looks like this:

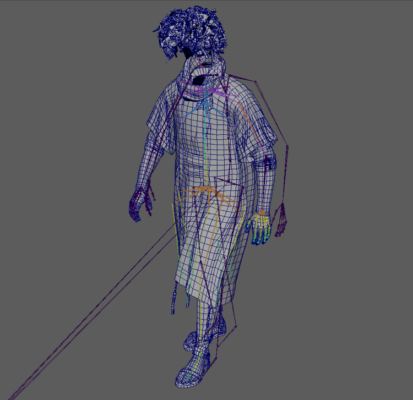

- And we have successfully transferred the animation onto our character!
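HumanIK handles this mapping inside Maya, so no code is needed in practice. To illustrate the idea of pairing two differently named skeletons through a shared biped definition, here is a deliberately simplified Python sketch. The joint names, the slot names (loosely modelled on HumanIK’s naming), and the functions `build_joint_map` and `retarget_pose` are all hypothetical, and the retargeting step simply copies rotations, ignoring the rest-pose and proportion differences that a real solver compensates for.

```python
from typing import Dict, Tuple

Rotation = Tuple[float, float, float]  # local Euler rotation in degrees (x, y, z)

# Hypothetical "characterizations": each skeleton's joint names mapped onto
# shared biped slot names (loosely modelled on HumanIK's slot naming).
SOURCE_TO_SLOT: Dict[str, str] = {
    "Pelvis": "Hips",
    "T8": "Spine",
    "Head": "Head",
    "LeftUpperArm": "LeftArm",
    "LeftForeArm": "LeftForeArm",
    "LeftUpperLeg": "LeftUpLeg",
}

TARGET_TO_SLOT: Dict[str, str] = {
    "root_jnt": "Hips",
    "spine_02_jnt": "Spine",
    "head_jnt": "Head",
    "l_upperarm_jnt": "LeftArm",
    "l_lowerarm_jnt": "LeftForeArm",
    "l_thigh_jnt": "LeftUpLeg",
}


def build_joint_map(source: Dict[str, str], target: Dict[str, str]) -> Dict[str, str]:
    """Pair source and target joints that were assigned the same slot."""
    slot_to_target = {slot: joint for joint, slot in target.items()}
    return {
        src_joint: slot_to_target[slot]
        for src_joint, slot in source.items()
        if slot in slot_to_target
    }


def retarget_pose(pose: Dict[str, Rotation], joint_map: Dict[str, str]) -> Dict[str, Rotation]:
    """Copy each mapped source joint's rotation onto its target joint.

    Deliberately naive: real retargeting also compensates for differing
    rest poses, joint orientations, and limb proportions.
    """
    return {joint_map[j]: rot for j, rot in pose.items() if j in joint_map}


if __name__ == "__main__":
    joint_map = build_joint_map(SOURCE_TO_SLOT, TARGET_TO_SLOT)
    # One frame of the source skeleton; "RightToe" has no slot here, so it is skipped.
    frame = {"Pelvis": (0.0, 15.0, 0.0), "LeftForeArm": (0.0, 0.0, 45.0), "RightToe": (5.0, 0.0, 0.0)}
    print(retarget_pose(frame, joint_map))
    # {'root_jnt': (0.0, 15.0, 0.0), 'l_lowerarm_jnt': (0.0, 0.0, 45.0)}
```

Going through shared slot names, rather than mapping one skeleton directly to the other, means each skeleton only has to be described once to be paired with any other skeleton described the same way.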

From here, a few things still need fixing. We delete unnecessary keyframes and smooth the animation curves. We add animation layers to fix and fine-tune the animation, for example by exaggerating poses or changing the timing. The character animation is then baked (the final motion is written to keyframes on the skeleton) and exported as a new .FBX file. This file is imported into Unreal Engine, where it can be applied to the in-game characters.
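Deleting unnecessary keyframes boils down to removing keys that the curve can reproduce without them. The sketch below is a hypothetical simplification, not how Maya does it: it assumes linear interpolation between keys (Maya curves usually use spline tangents), greedily extends a straight segment from the last kept key, and keeps a key only when skipping it would let some skipped key drift more than `tolerance` from that segment. The function name `reduce_keys` and the tolerance value are assumptions.

```python
from typing import List, Tuple

Key = Tuple[float, float]  # (frame, value); frames must be strictly increasing


def reduce_keys(keys: List[Key], tolerance: float) -> List[Key]:
    """Drop keys that a straight line between the surviving keys reproduces within tolerance.

    Hypothetical sketch: assumes linear interpolation between keys.
    """
    if len(keys) <= 2:
        return list(keys)
    kept = [keys[0]]
    anchor = 0  # index of the most recently kept key
    for i in range(1, len(keys) - 1):
        # Try a straight segment from the anchor to the key after i,
        # which would make every key from anchor+1 through i redundant.
        f0, v0 = keys[anchor]
        f1, v1 = keys[i + 1]
        fits = all(
            abs(v0 + (v1 - v0) * (f - f0) / (f1 - f0) - v) <= tolerance
            for f, v in keys[anchor + 1 : i + 1]
        )
        if not fits:
            # Skipping key i would distort the curve, so keep it as the new anchor.
            kept.append(keys[i])
            anchor = i
    kept.append(keys[-1])  # always keep the last key
    return kept


if __name__ == "__main__":
    # A linear move followed by a hold.
    curve = [(0, 0.0), (1, 10.0), (2, 20.0), (3, 30.0), (4, 30.0), (5, 30.0)]
    print(reduce_keys(curve, tolerance=0.01))
    # [(0, 0.0), (3, 30.0), (5, 30.0)]
```

A larger tolerance removes more keys and makes the curve easier to edit by hand, at the cost of flattening small details of the performance.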

We did begin with traditional animation methods, but now that we’ve tried both, we can see that this process far outperforms the traditional approach in both time and results!

A sneak peek of a cut scene in progress